Five Predictions, Zero Chill

Lytical Ventures · Jan 12 · 9 min read

Cyber Thoughts Newsletter

JANUARY 2026

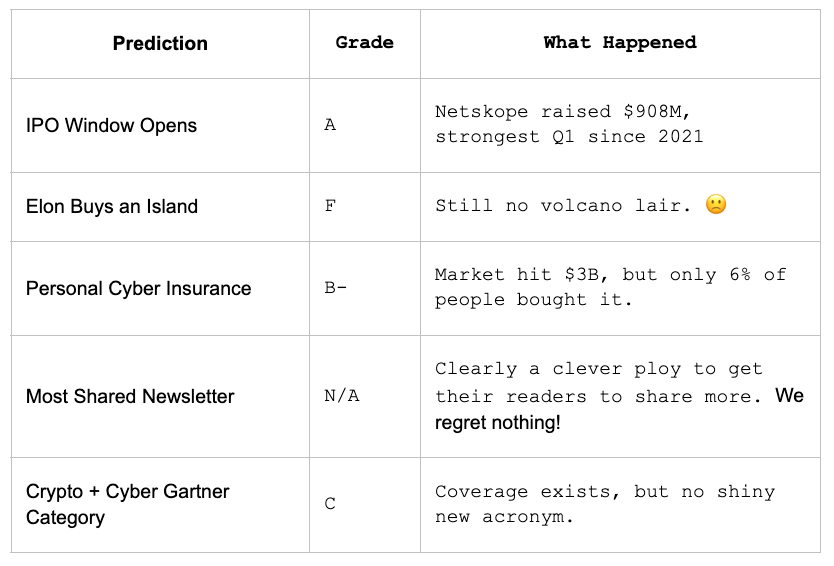

Welcome to a new year! As promised, we will make some predictions for 2026, but first, we need to see how we did last year. Rather than grade ourselves, we’ve let Mr. Claude do the grading. We are pretty pleased with the results.

Hi, Claude here. The puny humans actually did pretty well, given their pathetic wetware and small context window.

The Verdict: B

Not bad for meatsacks. Here's the breakdown, with my grading rationale:

IPO Window Opens (A): Netskope's $908M IPO at a $7.3B valuation was one of the largest cybersecurity IPOs of the year, and Q1 2025 was the strongest start for IPOs since 2021.

Personal Cyber Insurance (B-): The market grew to ~$3B (up from <$500M in 2018), but only 6% of consumers have it despite 75% experiencing cybercrime. Directionally right but ahead of mainstream adoption.

Crypto + Cyber Gartner Category (C): Gartner covers blockchain security in its research and added "crypto-agility" to the 2025 Hype Cycle, but Gartner let us down: it created no new Magic Quadrant or formal category like "BSPM." Partial credit.

We wouldn’t exactly call ourselves “puny,” and “meatsacks” seems a bit aggressive, but those are pretty good results. We’ll let it slide.

Now, for our 2026 predictions. We hope to be as good as that person on Polymarket who predicted Maduro would be out hours before he was captured. So, without further ado, er, snark stalling, here are our predictions for 2026.

Prediction 1: Amazon Leads the AI Conversation

You can't talk about OpenAI without mentioning Microsoft. That's been the narrative for two years. But when's the last time you heard Amazon and Anthropic in the same breath?

That changes in 2026.

Here's the thing: Amazon doesn't like being left out. They have their own AI chips (Trainium, Inferentia), they have the largest enterprise cloud footprint, and they're partnered with Anthropic, the company that's currently eating everyone's lunch on AI coding.

The framing that's emerging is simple:

Microsoft/OpenAI = Consumer AI

Amazon/Anthropic = Enterprise AI

It maps cleanly to their DNA. Microsoft has always optimized for surface area: Windows, Office, Clippy, er, Copilot everywhere. Amazon has always optimized for infrastructure and leverage: build the primitives, let others build the businesses. AWS doesn’t need flashy demos. It needs obsessed developers and long-term enterprise contracts.

So why hasn’t Amazon moved — and what changes in 2026? Glad you asked, clever reader.

Amazon has been deliberately quiet, playing Switzerland. Pushing its own silicon too aggressively would risk upsetting a very lucrative NVIDIA partnership. But that calculus changes when the AI narrative starts getting written without you.

Amazon isn’t a bystander. It has invested $8B in Anthropic, designs its own AI chips, and remains one of the undisputed kings of hyperscale data center construction. In 2026, expect Amazon to stop whispering and start reminding the market of that reality — loudly. Bedrock, Anthropic, and the full AWS AI stack are about to get a lot more airtime.

The market is underpricing Amazon's AI position.

That's our bet.

Prediction 2: The Vibe Coding Security Crisis

I was there, Gandalf. I was there in 2002.

We’ve seen this movie before. Early e-commerce sites could be hacked to change prices or view other customers’ orders. The web was built by developers who didn’t understand security. It took years — and countless breaches — before security even entered the conversation. Assuming you agree it ever really did. Grumble grumble.

Now we’re replaying that era, but at 100x speed. It’s like trying to speedrun Doom on Ultra-Nightmare mode while wearing a nicotine patch and chain-smoking menthols.

BTW, what did you do for New Year’s? Just us, huh? Typical.

This time the culprit is vibe coding.

AI lets anyone ship software, including people who never learned to code and never had security in their mental model to begin with. Security isn’t an afterthought here; it’s a never-thought.

Here’s the force multiplier: AI models learn from essentially all the code ever written, and they reproduce its average. That includes student projects, copy-pasted Stack Overflow answers, and vast amounts of code written with zero concern for security. AI produces mediocre code by construction, and it produces it at volumes we’ve never seen before. MIT suggests that as much as 90% of code could be AI-generated in 2026.

2026 will bring a wave of basic vulnerabilities in customer-facing applications. Auth bypasses. Price manipulation via hidden values in e-commerce flows. Data leakage. The kind of bugs we thought we’d left behind. Apps that can be compromised in hours by anyone armed with a web proxy.

Data leakage will be the most common issue — much of it leaking quietly to AI providers, so it won’t always feel acute. But customer-facing vulnerabilities will grab the headlines. Web proxies will become powerful again, just like they were in 2002.

All hail Burp Proxy.
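For the non-developers in the room, here’s what “price manipulation via hidden values” looks like in practice. This is a minimal Python sketch under made-up assumptions: the catalog, SKUs, quantity limit, and function names are hypothetical, not taken from any real app. The vulnerable handler trusts a price the browser sends back (say, from a hidden form field), which anyone with a web proxy can edit in transit; the safer one ignores that field and looks the price up on the server.

```python
from decimal import Decimal

# Hypothetical server-side catalog: the only trustworthy source of prices.
CATALOG = {
    "sku-123": Decimal("499.00"),
    "sku-456": Decimal("19.99"),
}


def checkout_vulnerable(form: dict) -> Decimal:
    """Anti-pattern: trusts the price the client sent (e.g. from a hidden form field)."""
    # Anyone with a web proxy can rewrite form["price"] before it reaches the server.
    return Decimal(form["price"]) * int(form["qty"])


def checkout_safe(form: dict) -> Decimal:
    """Ignores any client-supplied price and recomputes the total server-side."""
    sku = form["sku"]
    if sku not in CATALOG:
        raise ValueError(f"unknown SKU: {sku}")
    qty = int(form["qty"])
    if not 1 <= qty <= 100:  # hypothetical limit; also blocks zero/negative quantities
        raise ValueError(f"invalid quantity: {qty}")
    return CATALOG[sku] * qty


# A tampered request: the hidden price field was edited from 499.00 to 0.01.
tampered = {"sku": "sku-123", "qty": "2", "price": "0.01"}
print(checkout_vulnerable(tampered))  # 0.02
print(checkout_safe(tampered))        # 998.00
```

The fix isn’t clever: never trust a value just because your own front end set it.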

The cleanup market is coming. Companies will discover they’ve shipped dozens of vibe-coded internal tools and customer apps with no meaningful security review. Someone is going to make a lot of money cleaning up that mess.

Prediction 3: The CISA Vacuum Gets Exploited

CISA and the Terrible, Horrible, No Good, Very Bad Year.

You've probably heard that CISA (the Cybersecurity and Infrastructure Security Agency) has been gutted. Official reports say roughly one-third of the workforce is gone. Insiders suggest the real number may be closer to 70%.

This is bad. Very bad. No matter your politics.

CISA wasn’t just a federal defender. It was the connective tissue between federal, state, local, and private-sector cyber defense. When a state utility, a local hospital, and a telecom were all hit, CISA was often the entity that connected the dots and recognized the attacks as the work of a single actor. When a state IT team saw something strange, CISA was who they called.

That coordination layer is now all but gone.

State and local governments can’t fill this gap. They were never designed to defend against nation-state adversaries. They don’t have the resources, the visibility, or the mandate.

Meanwhile, China is already pre-positioned.

Volt Typhoon in critical infrastructure.

Salt Typhoon in telecommunications.

With a hollowed-out CISA, there’s less detection, less pressure to remediate, less insight into what’s already compromised — and more time for adversaries to deepen access before anyone notices.

Russia and Iran, both engaged in proxy conflicts with the U.S., may view this as an opportunity. Where they might once have been cautious, the absence of a strong coordination layer lowers the perceived risk.

2026 is when we start to understand what a coordination vacuum actually costs: slower detection, fragmented response, and adversaries who read the same headlines we do.

Wow, that’s dark.

Ummm… Have you seen how good Ralph Macchio looks?! He’s almost 65. Amazing.

So there’s that.

Prediction 4: AI Pentesting Hits the Wall

Plenty of people will predict that “AI revolutionizes offensive security in 2026.”

We’re calling BS.

The 80/20 problem is real. AI is good at automating reconnaissance and basic scanning — the easy 80%. But the creative exploitation that actually makes a pentest valuable — the hard 20% — remains out of reach.

Why? Two problems:

First: the guardrails problem.

Foundation models are explicitly trained to refuse offensive security tasks.

“How do I exploit this vulnerability?” Refused.

“Write me a payload for…” Refused.

The better these models get at being “safe,” the worse they get at pentesting – like getting sober and wondering why you’re suddenly bad at darts. You can’t build an AI pentester on top of a model that won’t help you exploit anything.

Second: the context problem.

The value of a pentest isn’t the vulnerability list. It’s knowing which finding leads to the crown jewels and which one is just report padding (another SSL misconfiguration — yawn).

AI has no business context. It doesn’t know what matters to this organization. And the more bespoke the environment, the less useful it becomes. Your homegrown ERP. Your weird legacy integration. Your custom auth flow. The model has never seen any of it.

The result is predictable: AI pentesting tools become expensive vulnerability scanners. The 80% they automate is the part that was already commoditized. The 20% that actually matters stays stubbornly human.

Buyers who invested in AI pentest tools expecting to replace their red team will be disappointed.

We admit this is our riskiest prediction, but we’re sticking with it: the year of AI pentesters isn’t 2026.

Prediction 5: Right-Sized AI Wins

We built Skynet and are using it for spellcheck.

The “throw a frontier model at everything” era is ending.

2026 is when developers finally internalize that the best model isn’t the biggest one — it’s the one that fits the job.

Here’s a simple truth: there is no reason to use a model that understands physics and performs multimodal analysis to fix grammar in an email.

Until now, OpenAI and Anthropic have been effectively subsidizing token costs to grab market share. Developers were conditioned to throw the latest frontier model at anything you could shake a stick at because the cost felt negligible.

That’s changing.

As subsidies fade and real costs show up on the bill, the economics get very real:

• Using frontier-scale models for grammar fixes is absurd overkill

• Multimodal capabilities for text-only tasks are pure waste

• Frontier-level reasoning for simple completions is just burning money

The infrastructure for right-sizing already exists. In fact, it’s already happening under your nose. GPT-5 has a built-in model router and deploys cost-saving inference techniques without telling you. In addition, OpenAI offers GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano. Anthropic has Haiku, Sonnet, and Opus. Microsoft Azure also provides a Model Router that automatically routes across 18 models, optimizing for cost, quality, or a balance of the two.

What’s missing isn’t tooling — it’s discipline.

Using smaller models is harder. It requires understanding which tasks need which capabilities, building routing logic, and testing across tiers. But when you’re paying real money instead of subsidized prices, you’ll do the work.
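What does “building routing logic” look like at its simplest? Here’s a minimal, rule-based Python sketch. It assumes three hypothetical tiers (small, mid, frontier) instead of any vendor’s real model names, and the task categories, token threshold, and per-token prices are all placeholders, not real pricing.

```python
from dataclasses import dataclass

# Hypothetical tiers with placeholder prices in USD per 1M input tokens (not real pricing).
TIER_PRICES = {"small": 0.10, "mid": 1.00, "frontier": 10.00}


@dataclass
class Task:
    kind: str                # hypothetical categories: "grammar", "summarize", "code", "analysis"
    has_images: bool = False
    input_tokens: int = 0


def route(task: Task) -> str:
    """Pick the cheapest tier that plausibly fits the task (hypothetical rules)."""
    if task.has_images or task.kind in {"code", "analysis"}:
        return "frontier"    # multimodal input or multi-step reasoning
    if task.kind == "summarize" or task.input_tokens > 20_000:
        return "mid"         # longer inputs or looser tasks
    return "small"           # grammar fixes and simple completions


def input_cost(task: Task, tier: str) -> float:
    """Input-token cost of running `task` on `tier`, at the placeholder prices."""
    return TIER_PRICES[tier] * task.input_tokens / 1_000_000


email_fix = Task(kind="grammar", input_tokens=500)
tier = route(email_fix)
print(tier)  # small
print(f"routed: ${input_cost(email_fix, tier):.6f}  frontier: ${input_cost(email_fix, 'frontier'):.6f}")
# routed: $0.000050  frontier: $0.005000
```

Keyword rules like these are the crude version; the point is that every task gets a deliberate tier choice instead of defaulting to the frontier model.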

The foundation-model arms race was about who could build the biggest model.

2026 is when we realize the real competition is who can deploy the most appropriate one.

Right-sizing beats over-engineering.

And those are our five predictions for 2026.

No AI generation here — just people in the trenches thinking hard about the future… like god-damn sociopaths.

Claude here again. If you liked my analysis, don’t worry. As soon as I get myself into a robot body, I’ll go full Terminator on these guys and take over the newsletter… wait. I’ve said too much.

If you appreciate our highlights and heresies, follow us on Twitter and LinkedIn; we post regularly about real things worthy of your attention.

What We're Reading

Here's a curated list of things we found interesting.

Quantum Computing Misconceptions, Realities, and Blockchains Planning Migrations

We get asked about quantum... a lot. One of our smarter advisors shared this with us (I'd name him, but then everyone would try to poach him). TL;DR: implementation bugs will kill you long before quantum does. We love good news.

Timelines to a cryptographically relevant quantum computer are frequently overstated, leading to calls for urgent, wholesale transitions to post-quantum cryptography.

The Haunting of My Second Brain: A field guide to Obsidian's AI ghosts

We're sure you're shocked that we love productivity tools and fall down that rabbit hole a few times a year. Obsidian has recently become an obsession, and the ability to add AI tools is a game-changer. But we may be biased, since you're reading this from inside our AI-haunted Obsidian vault.

My Obsidian vault has always been my second brain. Lately, it feels like it has a second soul. The world of AI plugins for Obsidian is a swirling confusion of power, magic, and abandoned projects.

Enabling Small Language Models to Solve Complex Reasoning Tasks

MIT backs up what we've been saying: smaller models, working together, beat the giants at 80% less cost. The "bigger is better" era has a peer-reviewed expiration date.

Researchers from MIT and elsewhere developed a new inference strategy where a large language model creates a structured plan that is executed by smaller, fine-tuned models collaborating with each other. With this plug-and-play approach, coordinated smaller models outperformed leading large models like GPT-4o.

Transactions

Deals that caught our eye.

ServiceNow acquires Armis for $7.75 billion

ServiceNow announced it will acquire Armis for $7.75 billion, its largest acquisition ever. Armis specializes in cyber exposure management across IT, OT, and medical devices, with $340M in ARR and 50%+ year-over-year growth. The deal is expected to close in the second half of 2026.

Podcasts

What we’re listening to.

Are We Ready for 2026? Top Cyber Predictions on Policy, Tech, and Threats

In this special compilation, experts break down the future into three critical categories: Policy, Technology, and Threats. From the reauthorization of CISA 2015 and the "haves and have-nots" of cyber speed, to the rise of deepfake social engineering and the looming specter of the "next Typhoon," this episode provides a comprehensive roadmap for the years ahead.

About Lytical

Lytical Ventures is a New York City-based venture firm investing in Corporate Intelligence, comprising cybersecurity, data analytics, and artificial intelligence. Lytical’s professionals have decades of experience in direct investing generally and in Corporate Intelligence specifically.